Virtualization and Cloud Computing

What is virtualization?

In a nutshell, virtualization is software that separates physical infrastructures to create various dedicated resources. It is the fundamental technology that powers cloud computing.

"Virtualization software makes it possible to run multiple operating systems and multiple applications on the same server at the same time," said Mike Adams, director of product marketing at VMware, a pioneer in virtualization and cloud software and services. "It enables businesses to reduce IT costs while increasing the efficiency, utilization and flexibility of their existing computer hardware."

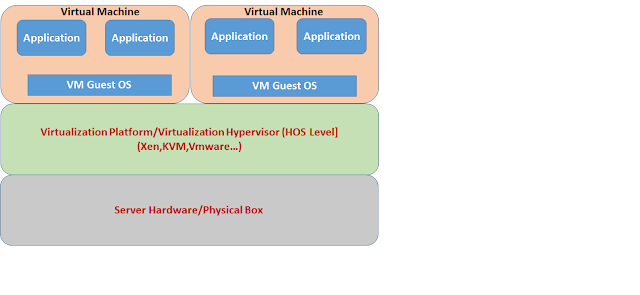

The technology behind virtualization is known as a virtual machine monitor (VMM) or virtualization manager, which separates compute environments from the actual physical infrastructure.

"Virtualization makes servers, workstations, storage and other systems independent of the physical hardware layer," said John Livesay, vice president of InfraNet, a network infrastructure services provider. "This is done by installing a hypervisor on top of the hardware layer, where the systems are then installed."

Definition

• Virtualization is the ability to run multiple operating systems on a single physical system and share the underlying hardware resources.

• It is the process by which one computer hosts the appearance of many computers.

• Virtualization is used to improve IT throughput and costs by using physical resources as a pool from which virtual resources can be allocated.

The Pros of Virtual Machines

1. Less physical hardware.

2. Central location to manage all assets. All of your virtual machines can be managed from one location.

3. More eco-friendly. If you look at your current configuration, most of your machines are idling along. But with them virtualized and running on a cluster, you maximize your machines’ potential while saving money on energy costs.

4. Disaster recovery is quick. Re-deploy virtual machines on your system (once you get the host machine back online) and you can have your system back up and running in no time.

5. Expansion potential. With the infrastructure in place, it’s simply a matter of deploying a new machine and configuring it. No need to go buy new servers (assuming you didn’t cheap out and buy bottom-of-the-line servers).

6. System upgrades. The time and heartache of making system images before applying a patch, and of having a system restore fail, are all realities. With the virtual environment, if something goes wrong while applying a patch or update, you can simply use a snapshot to roll the virtual machine back to where it was before you applied the patch.

7. Software licensing. Many software packages (such as Rockwell products) tie a license key to a hard drive ID. In a virtual environment, the hard drive ID stays the same no matter which piece of hardware it is running on.

8. Supports legacy operating systems. As hardware evolves and operating systems become obsolete, it’s harder to find hardware and software that are compatible. Virtualizing these machines eliminates the operating system compatibility problems. This doesn’t fix the problem of obsolete operating systems that are no longer supported, which is a security risk.

9. Forward compatibility. As new hardware becomes available, your virtual machines can still run on this new hardware (as long as it is supported by the virtual host software).

10. Use of thin clients. Using a thin client manager, replacing a bad terminal is as easy as a few clicks and powering on the new unit. Conversely, with a physical machine you’re stuck with re-imaging or building a replacement from scratch.

Many of the pros are related to the VMware ESXi/vSphere platform. They’ve done a great job of packing high availability features into their product:

• Monitor if a virtual machine has stopped running and restart it automatically (App High Availability).

• The ability to move a virtual machine running on one server to another without shutting down the guest virtual machine (vMotion).

• The ability to move a virtual machine running on one SAN to another without shutting down the guest machine (Storage vMotion).

• Automatically power on a guest machine on boot of the server.

For a more extensive list of features, check out the VMware website.

The Cons of Virtual Machines

1. Cost. The upfront cost can be much higher and, depending on how much availability you want, you’ll need to be willing to design the system for your needs now and in the future.

2. Complexity. If you’re not familiar with the hardware and network aspects of the whole setup, it can be a daunting task to figure out. Routing rules and VLANs continue to add complexity, especially if security is a concern.

3. Often the hardware is bundled together in one location, making a single disaster more likely to cause significant downtime. However, there are ways around this.

4. Hardware keys. Yes, you can use hardware keys. You can bind a USB port to a specific virtual machine. However, you are not able to move the virtual machine without physically moving the key as well.

5. Add-on hardware. In the past, you weren’t able to add on older PCI hardware and share it with the virtual machine. This has changed, but it doesn’t work 100% of the time. I’d recommend testing it thoroughly before deploying. Of course, this also limits which machine a virtual machine can run on because it will need to be bound to that piece of hardware.

The Traditional Server Concept

• System administrators often talk about servers as a whole unit that includes the hardware, the OS, the storage, and the applications.

• Servers are often referred to by their function, e.g. the Exchange server, the SQL server, the file server, etc.

• If the file server fills up, or the Exchange server becomes overtaxed, then the system administrators must add a new server.

• Unless there are multiple servers, if a service experiences a hardware failure, then the service is down.

• System administrators can implement clusters of servers to make them more fault tolerant. However, even clusters have limits on their scalability, and not all applications work in a clustered environment.

Pros

• Easy to conceptualize

• Fairly easy to deploy

• Easy to back up

• Virtually any application/service can be run from this type of setup

Cons

• Expensive to acquire and maintain hardware

• Not very scalable

• Difficult to replicate

• Redundancy is difficult to implement

• Vulnerable to hardware outages

• In many cases, the processor is under-utilized

The Virtual Server Concept

• Virtual servers seek to encapsulate the server software away from the hardware.

• This includes the OS, the applications, and the storage for that server.

• Servers end up as mere files stored on a physical box, or in enterprise storage.

• A virtual server can be serviced by one or more hosts, and one host may house more than one virtual server.

• Virtual servers can still be referred to by their function, e.g. email server, database server, etc.

• If the environment is built correctly, virtual servers will not be affected by the loss of a host.

• Hosts may be removed and introduced almost at will to accommodate maintenance.

• Virtual servers can be scaled out easily.

• If the administrators find that the resources supporting a virtual server are being taxed too much, they can adjust the amount of resources allocated to that virtual server.

• Server templates can be created in a virtual environment and used to create multiple, identical virtual servers.

• Virtual servers themselves can be migrated from host to host almost at will.

Pros

• Resource pooling

• Highly redundant

• Highly available

• Rapidly deploy new servers

• Easy to deploy

• Reconfigurable while services are running

• Optimizes physical resources by doing more with less

Cons

• Slightly harder to conceptualize

• Slightly more costly (must buy hardware, OS, apps, and now the abstraction layer)

Virtualization Status

• Offerings from many companies

– e.g. VMware, Microsoft, Sun, ...

• Hardware support

– Fits well with the move to 64-bit (very large memories), multi-core (concurrency) processors

– Intel VT (Virtualization Technology) provides hardware to support the virtual machine monitor layer

• Virtualization is now a well-established technology

Hypervisor

• A hypervisor, also called a virtual machine manager/monitor (VMM) or virtualization manager, is a program that allows multiple operating systems to share a single hardware host.

• Each guest operating system appears to have the host's processor, memory, and other resources all to itself. However, the hypervisor is actually controlling the host processor and resources, allocating what is needed to each operating system in turn and making sure that the guest operating systems (called virtual machines) cannot disrupt each other.

Hypervisor: A high-level explanation

The evolution of virtualization revolves largely around one very important piece of software: the hypervisor. As an integral component, it allows physical devices to share their resources among virtual machines running as guests on top of that physical hardware. To further clarify the technology, it’s important to analyze a few key definitions:

§ Type I Hypervisor: This type of hypervisor is deployed as a bare-metal installation. This means that the first thing to be installed on a server as the operating system will be the hypervisor. The benefit of this software is that the hypervisor will communicate directly with the underlying physical server hardware. Those resources are then virtualized and delivered to the running VMs. This is the preferred method for many production systems.

§ Type II Hypervisor: This model is also known as a hosted hypervisor. The software is not installed onto the bare metal, but instead is loaded on top of an already live operating system. For example, a server running Windows Server 2008 R2 can have VMware Workstation 8 installed on top of that OS. Although there is an extra hop for the resources to take when they pass through to the VM, the latency is minimal, and with today’s modern software enhancements the hypervisor can still perform optimally.

§ Guest Machine: A guest machine, also known as a virtual machine (VM), is the workload installed on top of the hypervisor. This can be a virtual appliance, operating system or other type of virtualization-ready workload. This guest machine will, for all intents and purposes, believe that it is its own unit with its own dedicated resources. So, instead of using a physical server for just one purpose, virtualization allows multiple VMs to run on top of that physical host. All of this happens while resources are intelligently shared among the VMs.

§ Host Machine: This is known as the physical host. Within virtualization, there may be several components (SAN, LAN, wiring, and so on). In this case, we are focusing on the resources located on the physical server. These resources include RAM and CPU. They are divided between VMs and distributed as the administrator sees fit. So, a machine needing more RAM (a domain controller) would receive that allocation, while a less important VM (a licensing server, for example) would have fewer resources. With today’s hypervisor technologies, many of these resources can be dynamically allocated.

§ Paravirtualization Tools: After the guest VM is installed on top of the hypervisor, there usually is a set of tools which are installed into the guest VM. These tools provide a set of operations and drivers for the guest VM to run more optimally. For example, although natively installed drivers for a NIC will work, paravirtualized NIC drivers will communicate with the underlying physical layer much more efficiently. Furthermore, advanced networking configurations become a reality when paravirtualized NIC drivers are deployed.
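The Host Machine definition above describes RAM and CPU being divided between VMs as the administrator sees fit. A minimal sketch of that bookkeeping follows. The `Host` class, its method names and the sizes are all made up for illustration, and a real hypervisor does far more (overcommit, dynamic allocation, scheduling).

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """A physical host whose RAM and CPU cores are shared among guest VMs."""
    ram_gb: int
    cpu_cores: int
    vms: dict = field(default_factory=dict)  # VM name -> (ram_gb, cpu_cores)

    def free(self):
        """Return (free RAM in GB, free cores) after current allocations."""
        used_ram = sum(ram for ram, _ in self.vms.values())
        used_cpu = sum(cpu for _, cpu in self.vms.values())
        return self.ram_gb - used_ram, self.cpu_cores - used_cpu

    def allocate(self, name, ram_gb, cpu_cores):
        """Reserve resources for a VM; refuse if the host cannot fit it."""
        free_ram, free_cpu = self.free()
        if ram_gb > free_ram or cpu_cores > free_cpu:
            return False
        self.vms[name] = (ram_gb, cpu_cores)
        return True


host = Host(ram_gb=64, cpu_cores=16)
host.allocate("domain-controller", ram_gb=16, cpu_cores=4)  # larger share
host.allocate("licensing-server", ram_gb=2, cpu_cores=1)    # smaller share
print(host.free())
```

The sketch refuses any request that does not fit, which is the simplest possible policy; it is only meant to show the physical host acting as a pool that guests draw from.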

Virtualization in Cloud Computing

Cloud computing takes virtualization one step further:

• You don’t need to own the hardware

• Resources are rented as needed from a cloud

• Various providers allow creating virtual servers:

– Choose the OS and software each instance will have

– The chosen OS will run on a large server farm

– Can instantiate more virtual servers or shut down existing ones within minutes

• You get billed only for what you used

Suppose you are XYZ.com

XYZ's Solution

• Host the web site in Amazon's EC2 (Elastic Compute Cloud)

• Provision new servers every day, and deprovision them every night

• Pay just $0.10* per server per hour

* more for higher capacity servers

Let Amazon worry about the hardware!
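To see why provisioning by day and deprovisioning at night matters, here is a small worked calculation at the quoted $0.10 per server per hour. The fleet size (10 servers), the 12-hour day and the 30-day month are assumptions picked only to make the arithmetic concrete.

```python
RATE_CENTS_PER_SERVER_HOUR = 10  # the $0.10 base rate quoted above


def monthly_cost_cents(servers, hours_per_day, days=30):
    """Cost in cents of running `servers` instances `hours_per_day` each day."""
    return servers * hours_per_day * days * RATE_CENTS_PER_SERVER_HOUR


# Assumed workload: 10 servers, needed only 12 hours a day.
always_on = monthly_cost_cents(servers=10, hours_per_day=24)
daytime_only = monthly_cost_cents(servers=10, hours_per_day=12)

print(f"always on:    ${always_on / 100:.2f}")
print(f"daytime only: ${daytime_only / 100:.2f}")
```

Under these assumptions the bill halves, because the servers simply do not exist (and are not billed) overnight.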

Cloud computing

Cloud computing, also known as on-demand computing, is a kind of Internet-based computing, where shared resources, data and information are provided to computers and other devices on demand. It is a model for enabling ubiquitous, convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal management effort. Cloud computing and storage solutions provide users and enterprises with various capabilities to store and process their data in third-party data centers. It relies on sharing of resources to achieve coherence and economies of scale, similar to a utility (like the electricity grid) over a network. At the foundation of cloud computing is the broader concept of converged infrastructure and shared services.

Cloud computing takes virtualization to the next step

• You don’t have to own the hardware

• You “rent” it as needed from a cloud

• There are public clouds

– e.g. Amazon EC2, and now many others (Microsoft, IBM, Sun, and others ...)

• A company can create a private one

– With more control over security, etc.

Goal 1 – Cost Control

• Cost: many systems have variable demands

– Batch processing (e.g. New York Times)

– Web sites with peaks (e.g. Forbes)

– Startups with unknown demand (e.g. the Cash for Clunkers program)

• Reduce risk

– Don't need to buy hardware until you need it

Goal 2 - Business Agility

• More than scalability: elasticity!

• Eli Lilly, in the rapidly changing health care business

– Used to take 3 - 4 months to give a department a server cluster, then they would hoard it!

– Using EC2, about 5 minutes!

– And they give it back when they are done!

• Scaling back is as important as scaling up

Goal 3 - Stick to Our Business

• Most companies don't WANT to do system administration

• Forbes says:

– We are a publishing company, not a software company

• But beware:

– Do you really save much on sys admin?

– You don't have the hardware, but you still need to manage the OS!

How Cloud Computing Works

• Various providers let you create virtual servers

– Set up an account, perhaps just with a credit card

• You create virtual servers ("virtualization")

– Choose the OS and software each "instance" will have

– It will run on a large server farm located somewhere

– You can instantiate more on a few minutes' notice

– You can shut down instances in a minute or so

• They send you a bill for what you use
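The lifecycle above (create instances, shut them down, get billed for use) can be sketched with a toy in-memory model. `ToyCloud` is not any real provider's API, and rounding usage up to whole hours is an assumption made only for this illustration.

```python
import math


class ToyCloud:
    """In-memory stand-in for a cloud provider; not a real provider API."""

    def __init__(self, cents_per_hour):
        self.cents_per_hour = cents_per_hour
        self.running = {}      # instance id -> (image name, launch time in hours)
        self.billed_hours = 0
        self._next_id = 1

    def launch(self, image, now):
        """Start an instance from the chosen OS/software image."""
        instance_id = f"i-{self._next_id}"
        self._next_id += 1
        self.running[instance_id] = (image, now)
        return instance_id

    def terminate(self, instance_id, now):
        """Shut an instance down and record the hours it was used."""
        _, started = self.running.pop(instance_id)
        # Assumption: usage is rounded up to whole hours.
        self.billed_hours += math.ceil(now - started)

    def bill_cents(self):
        return self.billed_hours * self.cents_per_hour


cloud = ToyCloud(cents_per_hour=10)
web = cloud.launch("linux-web", now=0)
db = cloud.launch("linux-db", now=0)
cloud.terminate(web, now=2.5)   # billed as 3 hours
cloud.terminate(db, now=8)      # billed as 8 hours
print(cloud.bill_cents())
```

The point of the sketch is that the customer only ever deals with instances and a bill; the server farm behind `launch` stays out of sight.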

Cloud Providers

A cloud provider is a company that offers some component of cloud computing, typically Infrastructure as a Service (IaaS), Software as a Service (SaaS) or Platform as a Service (PaaS), to other businesses or individuals. Cloud providers are sometimes referred to as cloud service providers or CSPs.

The Best Cloud Computing Companies

Types of cloud computing

Cloud computing is typically classified in two ways:

• Location of the cloud

• Type of services offered

Location of the cloud

By location, cloud computing is typically classified in the following four ways:

• Public cloud: In a public cloud, the computing infrastructure is hosted by the cloud vendor at the vendor’s premises. The customer has no visibility into or control over where the computing infrastructure is hosted. The computing infrastructure is shared among multiple organizations.

• Private cloud: The computing infrastructure is dedicated to a particular organization and not shared with other organizations. Some experts consider that private clouds are not real examples of cloud computing. Private clouds are more expensive and more secure when compared to public clouds. Private clouds are of two types: on-premise private clouds and externally hosted private clouds. Externally hosted private clouds are also used exclusively by one organization, but are hosted by a third party specializing in cloud infrastructure. Externally hosted private clouds are cheaper than on-premise private clouds.

• Hybrid cloud: Organizations may host critical applications on private clouds and applications with relatively fewer security concerns on the public cloud. The usage of both private and public clouds together is called hybrid cloud. A related term is cloud bursting. In cloud bursting, organizations use their own computing infrastructure for normal usage, but access a public cloud for high/peak load requirements. This ensures that a sudden increase in computing requirements is handled gracefully.

• Community cloud: This involves sharing of computing infrastructure between organizations of the same community. For example, all government organizations within the state of California may share computing infrastructure on the cloud to manage data related to citizens residing in California.
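The cloud bursting idea from the hybrid cloud entry is easy to show as arithmetic: fill private capacity first and send only the overflow to the public cloud. The function name and the unit of "demand" are hypothetical, a sketch rather than how any particular product places workloads.

```python
def place_workload(demand, private_capacity):
    """Split `demand` (arbitrary work units) between private and public capacity."""
    on_private = min(demand, private_capacity)
    on_public = demand - on_private  # overflow "bursts" to the public cloud
    return on_private, on_public


print(place_workload(demand=60, private_capacity=100))   # normal usage
print(place_workload(demand=140, private_capacity=100))  # peak load
```

At normal load nothing leaves the private infrastructure; during the peak only the 40 units above capacity are rented.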

Type of services offered

Based upon the services offered, clouds are classified in the following ways:

Infrastructure as a Service (IaaS) involves offering hardware-related services using the principles of cloud computing. These could include some kind of storage services (database or disk storage) or virtual servers. Leading IaaS offerings include Amazon EC2, Amazon S3, Rackspace Cloud Servers and Flexiscale.

Platform as a Service (PaaS) involves offering a development platform on the cloud. Platforms provided by different vendors are typically not compatible. Typical players in PaaS are Google App Engine, Microsoft's Azure and Salesforce.com's Force.com.

Software as a Service (SaaS) includes a complete software offering on the cloud. Users can access a software application hosted by the cloud vendor on a pay-per-use basis. This is a well-established sector. The pioneer in this field has been Salesforce.com's offering in the online Customer Relationship Management (CRM) space. Other examples are online email providers like Google's Gmail and Microsoft's Hotmail, Google Docs, and Microsoft's online version of Office called BPOS (Business Productivity Online Standard Suite).

David Linthicum classification:

The above classification is well accepted in the industry. David Linthicum describes a more granular classification on the basis of service provided. These are listed below:

• Storage-as-a-service

• Database-as-a-service

• Information-as-a-service

• Process-as-a-service

• Application-as-a-service

• Platform-as-a-service

• Integration-as-a-service

• Security-as-a-service

• Management/Governance-as-a-service

• Testing-as-a-service

• Infrastructure-as-a-service

OpenStack

OpenStack is a term that comes up often in discussions of cloud computing and its technologies, and many people turn to Google to find out what it is. Let us understand the basics of OpenStack.

What is OpenStack?

OpenStack is a free and open-source software platform for cloud computing, mostly deployed as infrastructure-as-a-service (IaaS). The software platform consists of interrelated components that control hardware pools of processing, storage, and networking resources throughout a data center. Users manage it through a web-based dashboard, through command-line tools, or through a RESTful API. OpenStack.org releases it under the terms of the Apache License.

Some refer to OpenStack as the operating system for the cloud, while others describe it as the software that helps to build a cloud environment. When it comes to installing OpenStack, we tend to call it software, or a platform, that helps to build a cloud environment.

Why OpenStack?

OpenStack is an open source Infrastructure as a Service (IaaS) platform that helps to create and manage scalable, elastic cloud computing for both public and private clouds. It aims to support interoperability between cloud services and to build a highly scalable and productive cloud environment. OpenStack is freely available under the Apache 2.0 license.

OpenStack History:

Rackspace Hosting and NASA jointly launched OpenStack in July 2010 as an open-source cloud software initiative. The project aimed to help organizations run cloud computing services on standard hardware. OpenStack drew on contributions from both organizations: Rackspace provided the code behind its storage and content delivery services and its production servers, while NASA contributed the technology behind Nebula (an open source cloud computing program that provides on-demand computing power for NASA researchers and scientists), along with its networking and data storage cloud service. Since September 2012, OpenStack has been managed by an independent non-profit organization.

OpenStack Functionality

• Amazon AWS interface compatibility

• Flexible clustering and availability zones

• Access Control List (ACL) with policies management

• Network management, security groups, traffic isolation

• Cloud semantics and self-service capability

• Image registration and image attribute manipulation

• Bucket-based storage abstraction (S3-compatible)

• Block-based storage abstraction (EBS-compatible)

• Hypervisor support: Xen, KVM, VMware vSphere, LXC, UML and Microsoft Hyper-V

How is OpenStack used in a cloud environment?

The cloud is all about providing computing for end users in a remote environment, where the actual software runs as a service on reliable and scalable servers rather than on each end user's computer. Cloud computing can refer to a lot of different things, but typically the industry talks about running different items "as a service": software, platforms, and infrastructure. OpenStack falls into the last category and is considered Infrastructure as a Service (IaaS). Providing infrastructure means that OpenStack makes it easy for users to quickly add new instances, upon which other cloud components can run. Typically, the infrastructure then runs a "platform" upon which a developer can create software applications, which are delivered to the end users.

OpenStack Architecture

The architecture of OpenStack includes the components below.

OpenStack components

OpenStack in Different Cloud Service Provider

OpenStack components Details

Keystone (Identity Service)

• The identity service performs the following functions: tracking users and their permissions, and providing a catalog of available services with their API endpoints.

• Keystone involves the following concepts: User, Credentials, Tenant, Role, Service and Endpoint.
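The two functions of the identity service (tracking permissions and providing a service catalog) can be modelled with two plain dictionaries. This is only a toy model of the concepts; the users, tenant and host name are invented, the ports are assumed defaults, and nothing here is Keystone's actual API or data layout.

```python
# Hypothetical catalog: service type -> API endpoint (ports are assumed defaults).
catalog = {
    "image": "http://controller.example:9292",
    "compute": "http://controller.example:8774",
}

# Hypothetical role assignments: (user, tenant) -> set of roles.
role_assignments = {
    ("alice", "demo"): {"member"},
    ("bob", "demo"): {"admin", "member"},
}


def endpoint_for(service_type):
    """Look up where a service's API lives, as a client would ask the catalog."""
    return catalog[service_type]


def has_role(user, tenant, role):
    """Check whether `user` holds `role` within `tenant`."""
    return role in role_assignments.get((user, tenant), set())


print(endpoint_for("image"))
print(has_role("alice", "demo", "admin"))
print(has_role("bob", "demo", "admin"))
```

Note that a role is always held within a tenant: the same user could be an admin in one tenant and have no role at all in another.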

Glance

• The OpenStack Image Service (Glance) enables users to discover, register, and retrieve virtual machine images. It offers a REST API that enables you to query virtual machine image metadata and retrieve an actual image.

• You can store virtual machine images made available through the Image Service in a variety of locations, from simple file systems to object-storage systems like OpenStack Object Storage.
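As a rough illustration of the REST API mentioned above, the sketch below builds (but does not send) the two kinds of request: one for image metadata and one for the image data itself. The endpoint host and token are placeholders, and the `/v2/images` paths and `X-Auth-Token` header are written on the assumption that the deployment exposes version 2 of the Image service API; check your own deployment's API reference before relying on them.

```python
from urllib.request import Request


def list_images_request(image_endpoint, token):
    """Build (but do not send) a request for image metadata."""
    return Request(
        url=f"{image_endpoint}/v2/images",
        headers={"X-Auth-Token": token},
        method="GET",
    )


def download_image_request(image_endpoint, token, image_id):
    """Build (but do not send) a request for the image data itself."""
    return Request(
        url=f"{image_endpoint}/v2/images/{image_id}/file",
        headers={"X-Auth-Token": token},
        method="GET",
    )


# Placeholder endpoint and token, for illustration only.
req = list_images_request("http://controller.example:9292", "TOKEN")
print(req.get_method(), req.full_url)
```

Passing either request to `urllib.request.urlopen` would actually perform the call; that step is left out here so the example runs without a live cloud.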

Compute

• Use OpenStack Compute to host and manage cloud computing systems. OpenStack Compute is a major part of an Infrastructure-as-a-Service (IaaS) system.

• OpenStack Compute interacts with OpenStack Identity for authentication, OpenStack Image Service for disk and server images, and OpenStack dashboard for the user and administrative interface. Image access is limited by projects and by users; quotas are limited per project (the number of instances, for example). OpenStack Compute can scale horizontally on standard hardware, and download images to launch instances.
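The per-project quota mentioned above (the number of instances, for example) amounts to a simple check at launch time. The classes below are hypothetical and only sketch the idea; they are not how OpenStack Compute implements quotas.

```python
class QuotaExceeded(Exception):
    """Raised when a project tries to go past its instance quota."""


class Project:
    def __init__(self, name, max_instances):
        self.name = name
        self.max_instances = max_instances  # the per-project quota
        self.instances = []

    def launch(self, instance_name):
        """Launch an instance only if the project is still under quota."""
        if len(self.instances) >= self.max_instances:
            raise QuotaExceeded(
                f"{self.name} already has {self.max_instances} instances"
            )
        self.instances.append(instance_name)


demo = Project("demo", max_instances=2)
demo.launch("web-1")
demo.launch("web-2")
try:
    demo.launch("web-3")
    third_launch = "accepted"
except QuotaExceeded:
    third_launch = "rejected"
print(third_launch)
```

The quota belongs to the project, not to the user, so every user launching into `demo` draws on the same allowance.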

Neutron

• OpenStack Networking mainly interacts with OpenStack Compute to provide networks and connectivity for its instances.

• Networking provides the network, subnet, and router object abstractions. Each abstraction has functionality that mimics its physical counterpart: networks contain subnets, and routers route traffic between different subnets and networks.
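The subnet and router abstractions behave like their physical counterparts, which can be illustrated with Python's standard `ipaddress` module. The subnet names and address ranges below are invented for the example, and this is a sketch of the concept rather than of the Networking API.

```python
import ipaddress

# Hypothetical subnets belonging to one network.
subnets = {
    "web": ipaddress.ip_network("10.0.1.0/24"),
    "db": ipaddress.ip_network("10.0.2.0/24"),
}


def subnet_of(address):
    """Return the name of the subnet containing `address`, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in subnets.items():
        if ip in net:
            return name
    return None


def needs_router(src, dst):
    """Traffic stays local when both ends share a subnet; otherwise it is routed."""
    return subnet_of(src) != subnet_of(dst)


print(needs_router("10.0.1.5", "10.0.1.9"))   # same subnet
print(needs_router("10.0.1.5", "10.0.2.7"))   # different subnets
```

Two instances on the `web` subnet talk directly, while traffic from `web` to `db` has to pass through a router.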

Horizon

• The OpenStack dashboard, also known as Horizon, is a web interface that enables cloud administrators and users to manage various OpenStack resources and services.

• The dashboard enables web-based interactions with the OpenStack Compute cloud controller through the OpenStack APIs.

Heat (Orchestration)

• The Orchestration module provides template-based orchestration for describing a cloud application, by running OpenStack API calls to generate running cloud applications. The software integrates other core components of OpenStack into a one-file template system. The templates allow you to create most OpenStack resource types, such as instances, floating IPs, volumes, security groups and users. It also provides advanced functionality, such as instance high availability, instance auto-scaling, and nested stacks. This enables OpenStack core projects to receive a larger user base.
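To give a feel for the one-file template idea, here is a sketch of a small template built as a Python dictionary and printed as JSON. Heat templates are normally written in YAML; the dictionary only mirrors that structure. The resource names, image name and flavor name are placeholders, and the template version string and resource types are assumptions based on the Heat Orchestration Template (HOT) format.

```python
import json

# Resource names and property values below are hypothetical placeholders.
template = {
    "heat_template_version": "2013-05-23",
    "description": "One server and one volume (illustrative only)",
    "resources": {
        "web_server": {
            "type": "OS::Nova::Server",
            "properties": {
                "image": "example-image",
                "flavor": "example-flavor",
            },
        },
        "data_volume": {
            "type": "OS::Cinder::Volume",
            "properties": {"size": 10},  # size in GB
        },
    },
}

print(json.dumps(template, indent=2))
```

Everything the application needs is declared in one document, and the Orchestration module is responsible for turning each entry under `resources` into the corresponding API calls.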

Ceilometer

The Telemetry module performs the following functions:

• Efficiently polls metering data related to OpenStack services.

• Collects event and metering data by monitoring notifications sent from services.

• Publishes collected data to various targets including data stores and message queues.

• Creates alarms when collected data breaks defined rules.
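The last function, raising an alarm when collected data breaks a defined rule, can be sketched as a threshold check over recent samples. The rule used here (mean of the last few samples above a threshold) and the state names are assumptions chosen for illustration, not the Telemetry module's actual evaluation logic.

```python
from statistics import mean


def evaluate_alarm(samples, threshold, window):
    """Return "alarm" when the mean of the last `window` samples exceeds `threshold`."""
    recent = samples[-window:]
    if len(recent) < window:
        return "insufficient data"
    return "alarm" if mean(recent) > threshold else "ok"


# Made-up CPU utilisation samples (percent), oldest first.
cpu_util = [35.0, 40.0, 82.0, 91.0, 88.0]
print(evaluate_alarm(cpu_util, threshold=80.0, window=3))
```

Averaging over a window rather than reacting to a single sample keeps one brief spike from triggering the alarm.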

Example
